Did Lyudmila Navalnaya, the mother of the late opposition leader Alexei Navalny, accuse her daughter-in-law of being responsible for the politician's death? No, that's not true: The audio in the video making this claim exhibits characteristics indicative of voice cloning through artificial intelligence, and its source can be linked to a network of Kremlin-backed propaganda outlets.

The claim appeared in a video (archived here) on TikTok by @loralarisa1401 on February 22, 2024, under the title (translated from Russian to English by Lead Stories staff), "The address of Alexei Navalny's mother to Yulia Navalnaya." The audio of the alleged address (as translated) says:

Dear Yulia Borisova Ambrosimova [the maiden name of Yulia Navalnaya] now I want to address you. You last saw my son in February 2022. You have not been banned from entering Russia, no criminal case has been opened against you, but you have never come to visit him in two years. In fact, as of the spring of 2021, you are no longer married to my son and are publicly seen with other men. It was you who forced him to come to Russia when he had just come out of a coma and had poor judgment. It was you who forced Alexei to move over his entire property to you. You have even disinherited your own son, who for this reason no longer speaks to you. You have turned your daughter against your son. It was strange and wild for me to see you smiling, a few hours after Alexei's death, speaking on his behalf in Munich, how you became his voice after his death. You stopped neither out of decency, nor did you observe mourning. You immediately began trading his death. You are an evil and mean person. I despise you and forbid you to speculate and use my son's name.

This is what the post looked like on TikTok at the time of writing:

(Source: TikTok screenshot taken on Mon Mar 4 07:52:51 2024 UTC)

Examining the alleged audio address reveals multiple instances of distortion and a metallic tone in the speaker's voice. These artifacts stand out even in comparison with the two publicly known, verified samples of her voice, recorded on February 20, 2024, and February 22, 2024 (archived here), when, devastated and physically exhausted, she addressed Russian President Vladimir Putin to demand the return of her son's body.

The resemblance to Lyudmila Navalnaya's voice could hint at the use of a widely accessible synthetic voice generator, like this one (archived here), to clone her voice (archived here).

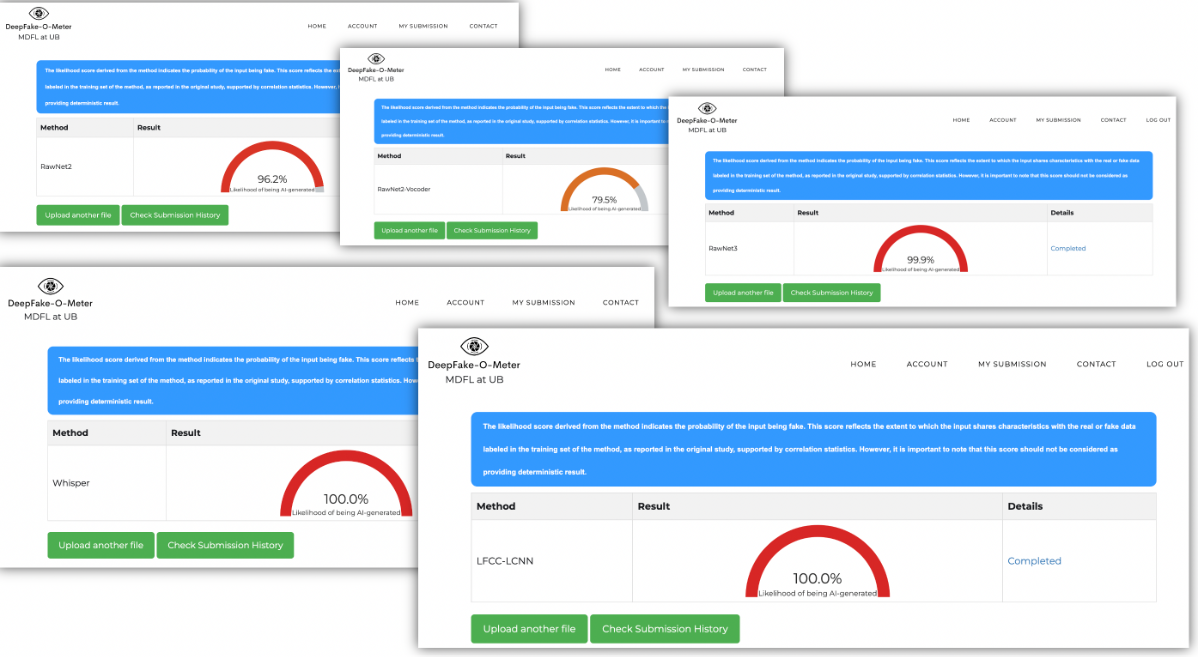

Lead Stories tested the recording using DeepFake-O-Meter, a tool developed (archived here) by the University at Buffalo to identify artificially generated content.

All five available models for detecting audio fakes concluded that the voice of Navalny's mother in the post was AI-generated:

(Sources: DeepFake-O-Meter screenshots taken on Tue Feb 27 16:50:21-18:10:20 2024 UTC; composite image by Lead Stories)

A search for the phrase "Lyudmila Navalnaya message to Yulia Navalnaya" (archived here), conducted on March 4, 2024, using Google News' index of credible news sites, did not yield any evidence-based reports on this claim. Had Lyudmila Navalnaya actually made such a statement, it would have been news of significant importance, widely reported in international media.

During his interview with TV Rain on February 23, 2024 (at the 10-minute mark), Ivan Zhdanov, the head of the Anti-Corruption Foundation founded by Navalny, also confirmed that the video address was generated using artificial intelligence.

The fake address of Navalny's mother was widely spread by pro-Kremlin channels on Telegram, commonly referred to as Z-bloggers for promoting and justifying Russian aggression in Ukraine: for example, this post (archived here), this post (archived here), this post (archived here) and this post (archived here). The file was also shared (archived here) by the Russian ultra-conservative, pro-Orthodox Church TV channel Tsargrad and by several other websites, like this one (archived here), that are routinely used to broadcast the Kremlin's agenda.

Russian journalist Maxim Kononenko issued an apology on his Telegram channel (archived here) for sharing the "video address," acknowledging that it had not been verified as authentic.